I am a first-year CS Ph.D. student at the School of Data Science, The Chinese University of Hong Kong, Shenzhen (CUHK-SZ), supervised by Prof. Kui Jia. I received my Bachelor’s degree from the University of Electronic Science and Technology of China (UESTC, 2014-2018) and my Master’s degree from Nanyang Technological University (NTU, 2018-2019), Singapore. Before joining CUHK-SZ, I worked as an R&D engineer at DexForce Technology, where I led the development of DexVerse™, the world’s leading Sim2Real AI Platform for Embodied Intelligence.

My research interests are mainly in the following areas:

- Systems:
  - High-performance, Heterogeneous and GPU-accelerated Simulation Engine Architecture
  - Data Generation and Model Training Systems for Embodied Intelligence

- Simulation:
  - Generative Model for Simulation
  - Differentiable Rendering and Physics
  - Neural Representation for Simulation

- Embodied Intelligence:
  - Physics-Structured Model Architecture
  - Online and Continual Learning for Embodied Agents
  - Sim2Real Transfer and Domain Adaptation

Projects

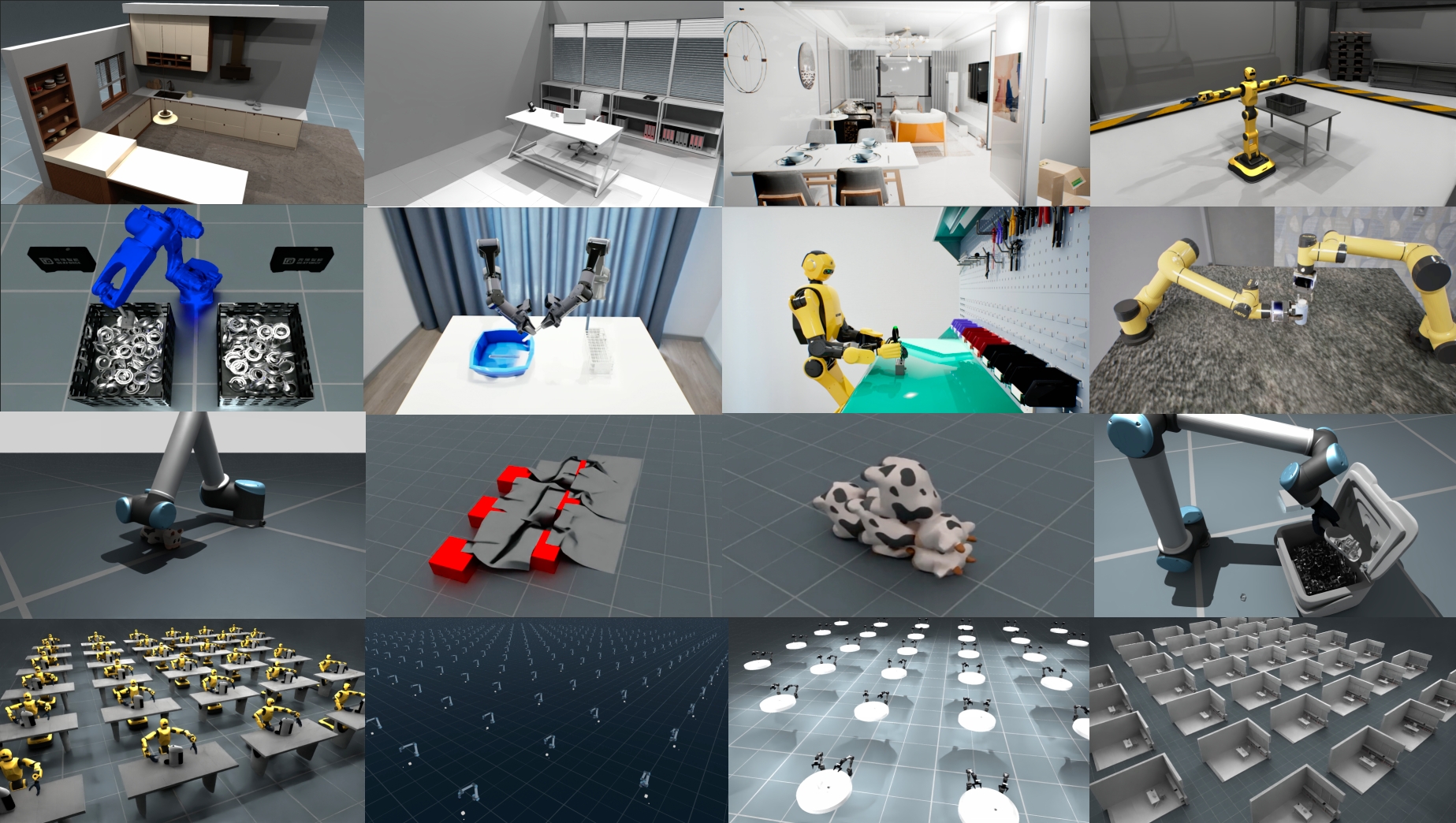

| EmbodiChain: An end-to-end, GPU-accelerated, and modular platform for building generalized Embodied Intelligence. Description: EmbodiChain is a unified, GPU-accelerated framework for embodied AI research and development. It integrates high-performance simulation, data collection via real-to-sim techniques, data scaling pipelines, modular model architectures, and efficient training and evaluation tools. These components work together to support rapid experimentation, deployment of embodied intelligence, and Sim2Real transfer to real-world robotic systems. |

| Open3D: A Modern Library for 3D Data Processing. Website / Code. Description: The leading open-source library for 3D processing with 400K+ monthly downloads from PyPI. Open3D exposes a set of carefully selected data structures and algorithms in both C++ and Python for 3D data processing tasks including point cloud processing, mesh processing, and 3D visualization. |

Publications

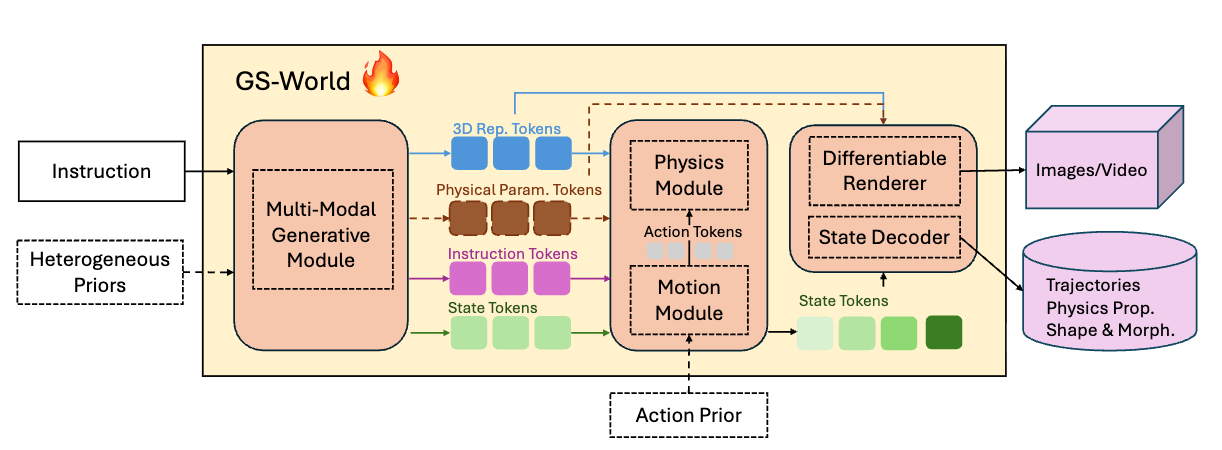

| GS-World: An Engine-driven Learning Paradigm for Pursuing Embodied Intelligence using World Models of Generative Simulation Position paper Guiliang Liu, Yueci Deng, Zhen Liu, Kui Jia Paper |

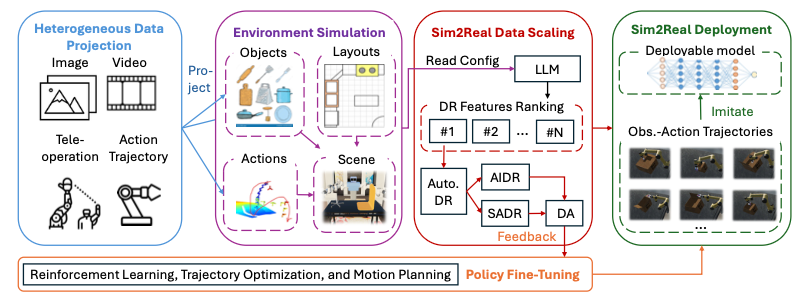

| DexScale: Automating Data Scaling for Sim2Real Generalizable Robot Control Guiliang Liu*, Yueci Deng*, Runyi Zhao, Huayi Zhou, Jian Chen, Jietao Chen, Ruiyan Xu, Yunxin Tai, Kui Jia (*Equal contribution) International Conference on Machine Learning (ICML), 2025 Poster Paper / Code Description: A novel data engine for automating data generation and scaling for sim-to-real transfer of robotic manipulation tasks. |

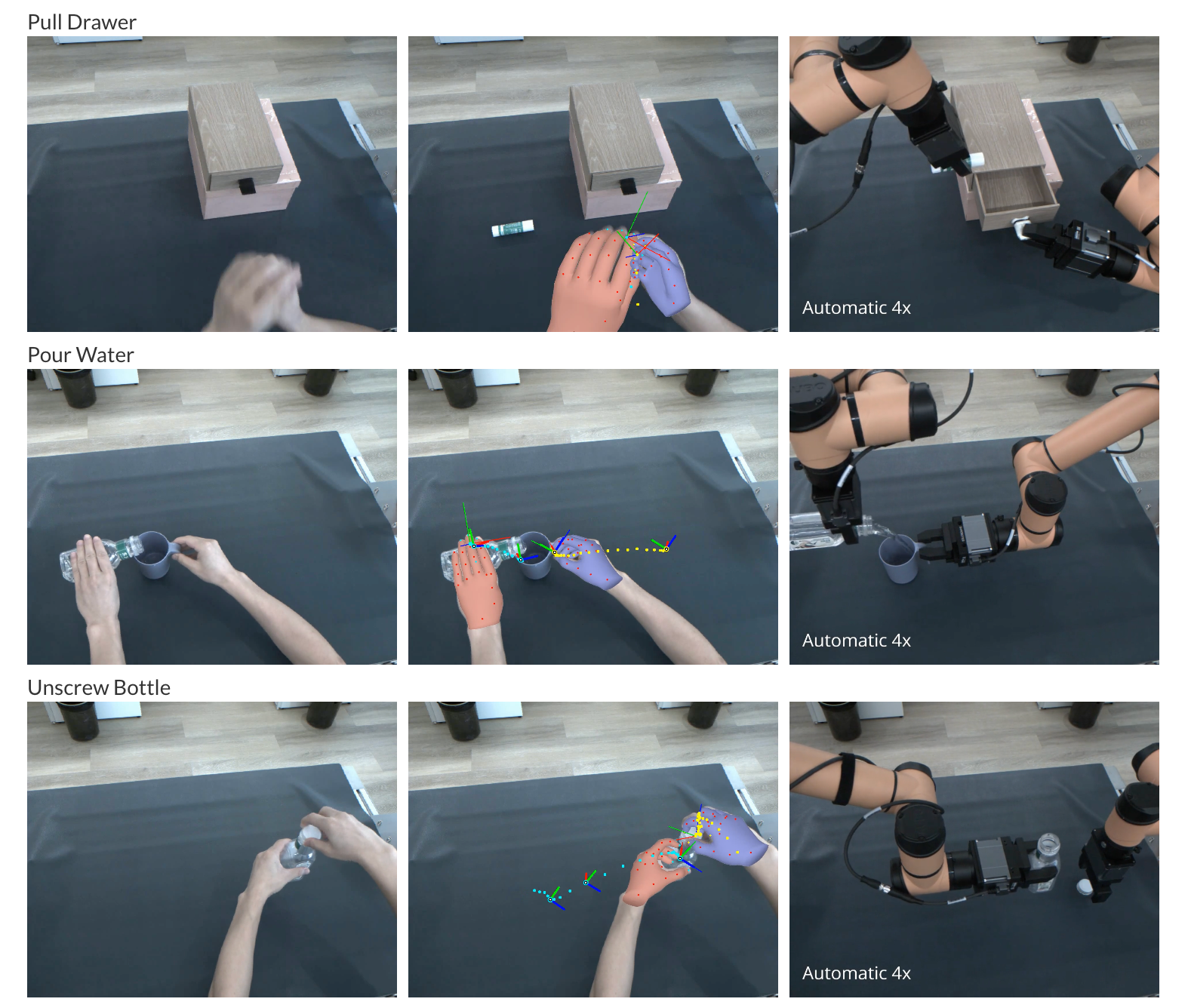

| You Only Teach Once: Learn One-Shot Bimanual Robotic Manipulation from Video Demonstrations Huayi Zhou, Ruixiang Wang, Yunxin Tai, Yueci Deng, Guiliang Liu, Kui Jia Robotics: Science and Systems (RSS), 2025 Paper / Code Description: This work proposes YOTO (You Only Teach Once), which extracts and then injects patterns of bimanual actions from as few as a single binocular observation of hand movements, and teaches dual robot arms various complex tasks. |